Research

Machine learning for Predictive Monitoring of Cyber-Physical Systems

Formal verification of Cyber-Physical Systems (CPSs) typically amounts to model checking of some formal model (a hybrid automaton) of the CPS. However, this task is computationally expensive, and so is limited to offline analysis. Moreover, the strong guarantees of model checking often cease to hold at runtime due to uncertain operating environments and complex processes that are hard to model precisely.

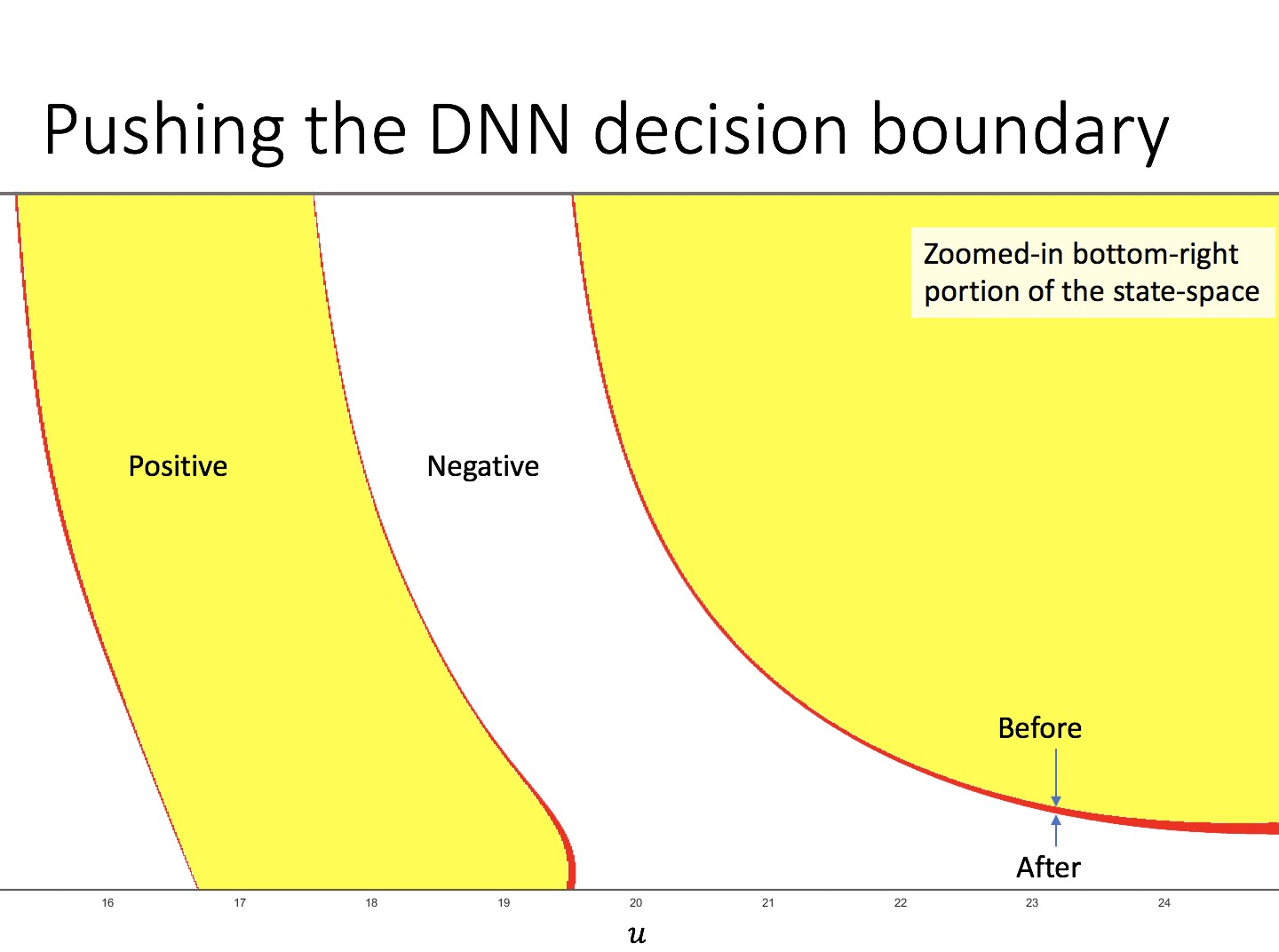

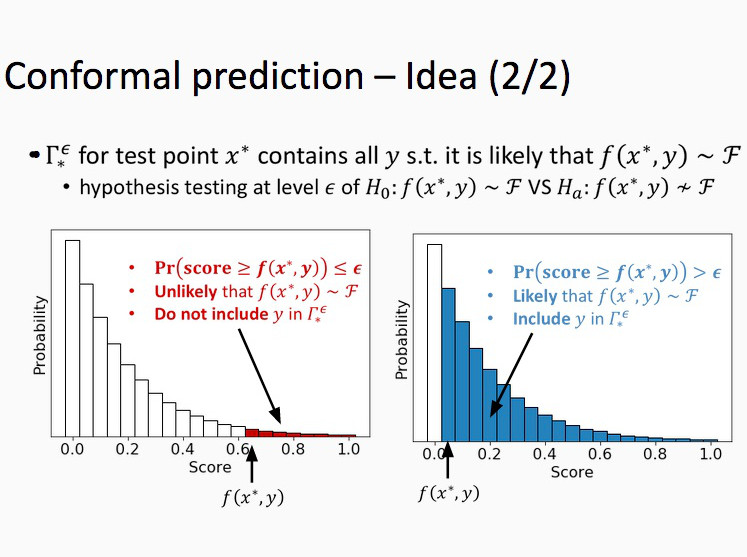

We focus on the predictive monitoring of CPSs, i.e., the problem of predicting at runtime whether a safety violation can be reached from the current CPS state. To provide scalable and accurate predictive monitors, we propose Neural Predictive Monitoring (NPM), a machine learning approach that learns reachability predictors described by deep neural networks (also known as neural state classifiers), using supervision from a hybrid automata reachability checker. To prevent potentially harmful prediction errors, NPM includes methods to quantify the predictive uncertainty of the monitor, and uses these uncertainty measures to derive rejection criteria that optimally recognize such errors without knowing the true reachability value. Finally, NPM includes an active learning method that uses these uncertainty measures to re-train and improve the monitor and the rejection rule where it matters most, i.e., on the uncertain states. Empirically, NPM is efficient, with computation times on the order of milliseconds, and effective, with highly accurate monitors and high error recognition rates.
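As a rough illustration of uncertainty-based rejection, the sketch below wraps a reachability prediction with a simple rejection rule. It is not NPM's actual rule: the use of binary predictive entropy as the uncertainty measure, the fixed threshold `max_entropy`, and the function names are all assumptions made for this example.

```python
import math


def predictive_entropy(p_unsafe: float) -> float:
    """Binary entropy (in bits) of the predicted probability of reaching an unsafe state."""
    if p_unsafe <= 0.0 or p_unsafe >= 1.0:
        return 0.0
    return -(p_unsafe * math.log2(p_unsafe)
             + (1.0 - p_unsafe) * math.log2(1.0 - p_unsafe))


def monitor(p_unsafe: float, max_entropy: float = 0.5) -> str:
    """Return 'safe' or 'unsafe', or 'reject' when the prediction is too uncertain.

    p_unsafe stands in for the output of a neural state classifier;
    max_entropy is a hypothetical, hand-picked rejection threshold.
    """
    if predictive_entropy(p_unsafe) > max_entropy:
        return "reject"
    return "unsafe" if p_unsafe >= 0.5 else "safe"


if __name__ == "__main__":
    for p in (0.02, 0.5, 0.97):
        print(p, monitor(p))
```

Rejected states are natural candidates for re-training, which is the intuition behind using the same uncertainty measure for active learning.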

Synthesizing Stealthy Attacks on Cardiac Devices

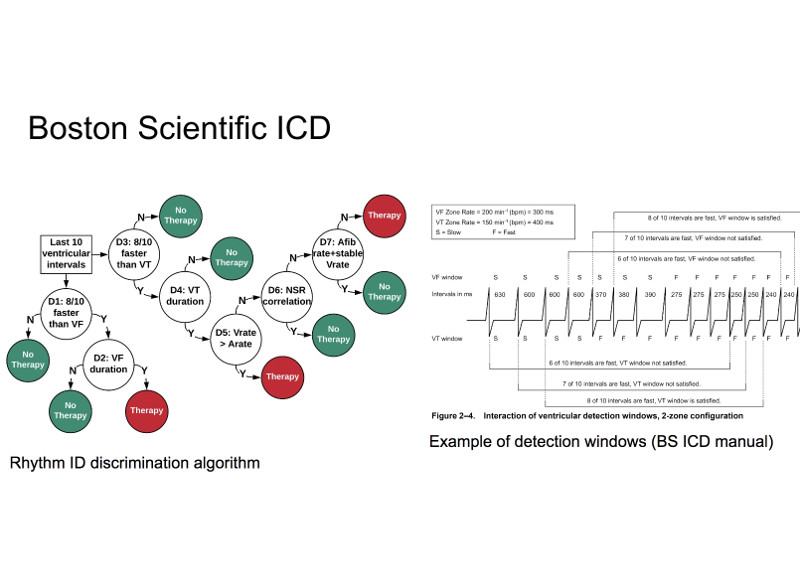

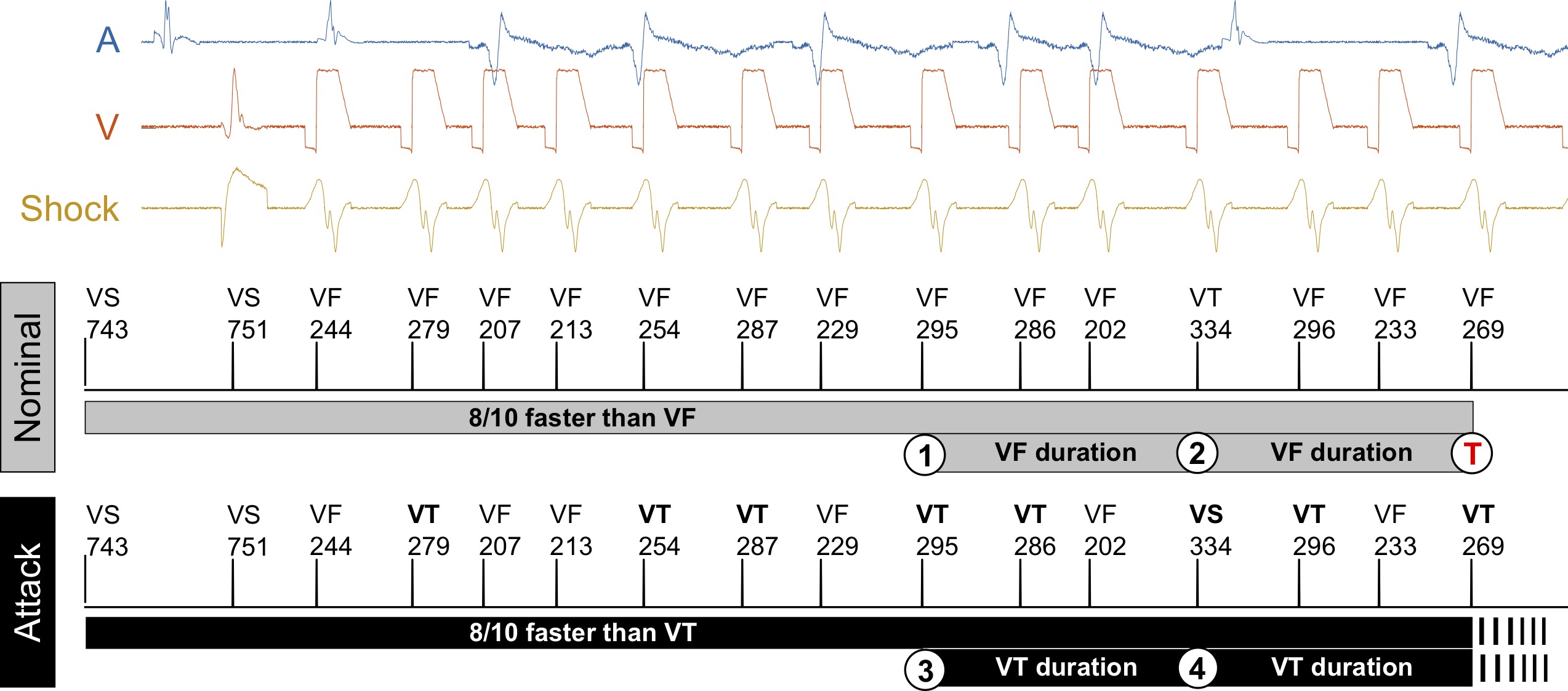

An Implantable Cardioverter Defibrillator (ICD) is a medical device for the detection of fatal cardiac arrhythmias and their treatment through electrical shocks intended to restore normal heart rhythm. An ICD reprogramming attack seeks to alter the device parameters to induce unnecessary therapy or prevent required therapy.

We present a formal approach for the synthesis of ICD reprogramming attacks that are both effective, i.e., lead to fundamental changes in the required therapy, and stealthy, i.e., are hard to detect. We focus on the discrimination algorithm underlying devices from Boston Scientific (one of the principal ICD manufacturers) and formulate the synthesis problem as one of multi-objective optimization. Our solution technique is based on an Optimization Modulo Theories (OMT) encoding of the problem and allows us to derive device parameters that are optimal with respect to the effectiveness-stealthiness tradeoff. Our method can be tailored to the patient's current condition, and generalizes to unseen signals.

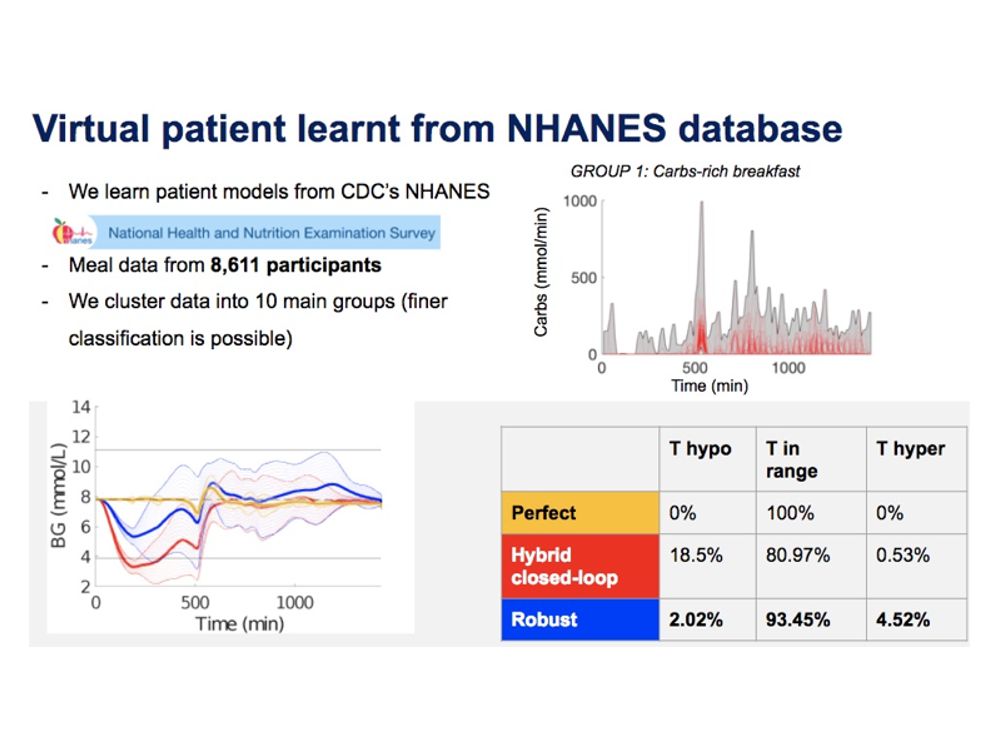

Data-driven robust control for insulin therapy

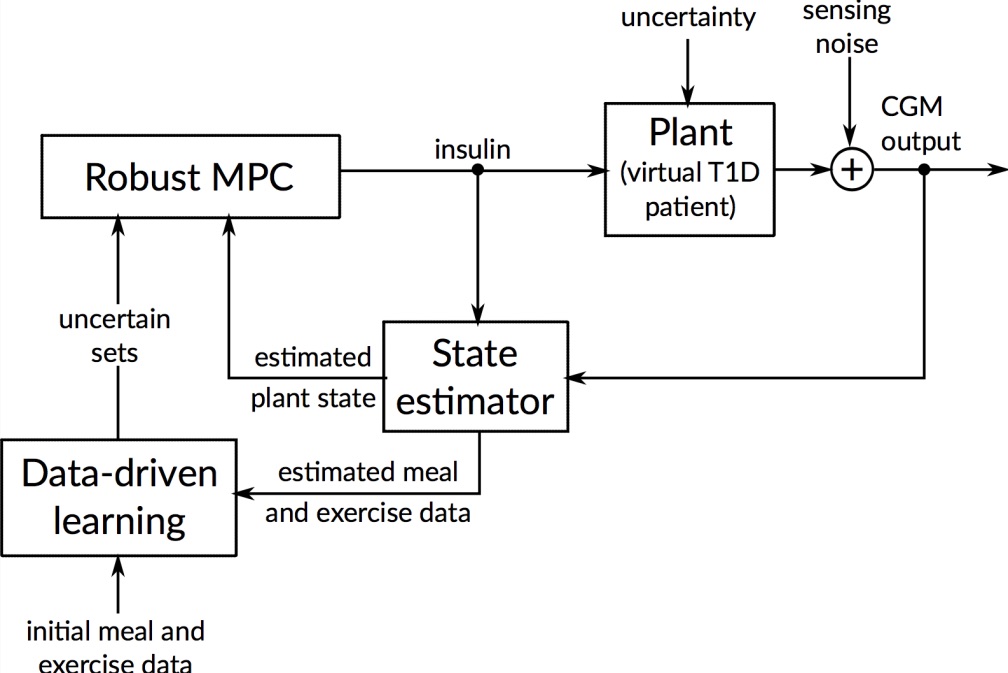

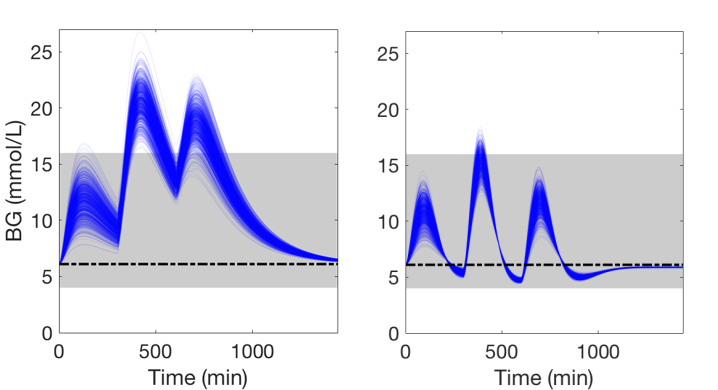

The artificial pancreas aims to automate treatment of type 1 diabetes (T1D) by integrating an insulin pump and a glucose sensor through control algorithms. However, fully closed-loop therapy is challenging because the blood glucose levels to be controlled depend on disturbances related to the patient's behavior, mainly meals and physical activity.

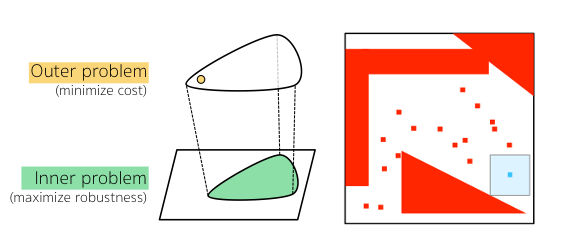

To handle meal and exercise uncertainties, we construct data-driven models of meal and exercise behavior, and develop a robust model-predictive control (MPC) system able to reject such uncertainties, thereby eliminating the need for meal announcements by the patient. The data-driven models, called uncertainty sets, are built from data so that they cover the underlying (unknown) distribution with prescribed probabilistic guarantees. Our robust MPC system then computes the insulin therapy that optimizes worst-case performance with respect to these uncertainty sets, thus providing a principled way to deal with uncertainty. State estimation follows a similar principle to MPC and exploits a prediction model to find the most likely state and disturbance estimate given the observations.
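The min-max idea behind robust control can be sketched on a toy problem. Everything specific here is an assumption for illustration only: the one-step scalar glucose model, the gains, the quadratic cost, and the finite uncertainty set of meal effects are hypothetical and much simpler than a real MPC formulation.

```python
def worst_case_cost(glucose, dose, disturbances,
                    target=120.0, insulin_gain=30.0, meal_gain=1.0):
    """Largest squared deviation from target over all disturbances in the set.

    Toy one-step model (an assumption): next = glucose - insulin_gain*dose + meal_gain*d.
    """
    return max(
        (glucose - insulin_gain * dose + meal_gain * d - target) ** 2
        for d in disturbances
    )


def robust_dose(glucose, candidate_doses, disturbances):
    """Pick the dose whose worst-case cost over the uncertainty set is smallest."""
    return min(candidate_doses,
               key=lambda u: worst_case_cost(glucose, u, disturbances))


if __name__ == "__main__":
    doses = [0, 1, 2, 3, 4]
    # Nominal design ignores meals; robust design hedges against a meal effect of up to 60.
    print("nominal:", robust_dose(180.0, doses, [0.0]))
    print("robust: ", robust_dose(180.0, doses, [0.0, 30.0, 60.0]))
```

In this toy setting the robust choice is a larger dose than the nominal one, because it balances the deviation in the no-meal case against the deviation in the largest-meal case.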

We evaluate our design on synthetic scenarios, including high-carbs intake and unexpected meal delays, and on large clusters of virtual patients learned from population-wide survey data sets (CDC NHANES).

SMT-based synthesis of safe and robust digital controllers

We present a new method for the automated synthesis of digital controllers with formal safety guarantees for systems with nonlinear dynamics, noisy output measurements, and stochastic disturbances. Our method derives digital controllers such that the corresponding closed-loop system, modeled as a sampled-data stochastic control system, satisfies a safety specification with probability above a given threshold. The method alternates between two steps: generation of a candidate controller (sub-optimal but rapid to generate), and verification of the candidate. The candidate is found by maximizing a Monte Carlo estimate of the safety probability, obtained by simulating the system with a non-validated ODE solver. To rule out unstable candidate controllers, we prove and utilize Lyapunov's indirect method for instability of sampled-data nonlinear systems. In the verification step, we use a validated solver based on SMT to compute a numerically and statistically valid confidence interval for the safety probability of the candidate controller. If this probability is not above the threshold, we expand the search space for candidates by increasing the controller degree. We evaluate our technique on three case studies: an artificial pancreas model, a powertrain control model, and a quadruple-tank process.
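The statistical side of this loop can be illustrated with a plain Monte Carlo estimate of a safety probability and a Hoeffding confidence interval. This is only a sketch under assumptions: the toy discrete-time system, the proportional `gain`, and the function names are hypothetical, and the simulation is not numerically validated, so it resembles the candidate-generation step rather than the SMT-based verification step.

```python
import math
import random


def estimate_safety(simulate_is_safe, n_samples, delta=0.05, seed=0):
    """Monte Carlo estimate of the safety probability with a Hoeffding interval.

    Returns (p_hat, lower, upper); the interval holds with probability >= 1 - delta.
    """
    rng = random.Random(seed)
    safe_runs = sum(1 for _ in range(n_samples) if simulate_is_safe(rng))
    p_hat = safe_runs / n_samples
    half_width = math.sqrt(math.log(2.0 / delta) / (2.0 * n_samples))
    return p_hat, max(0.0, p_hat - half_width), min(1.0, p_hat + half_width)


def make_toy_system(gain, steps=50, bound=2.0):
    """Hypothetical closed loop x' = (1.2 - gain) * x + noise; safe while |x| <= bound."""
    def simulate_is_safe(rng):
        x = 1.0
        for _ in range(steps):
            x = (1.2 - gain) * x + rng.gauss(0.0, 0.1)
            if abs(x) > bound:
                return False
        return True
    return simulate_is_safe


if __name__ == "__main__":
    for gain in (0.0, 0.7):
        print(gain, estimate_safety(make_toy_system(gain), n_samples=2000))
```

A candidate would be accepted only if the lower end of the interval exceeds the required threshold; otherwise the search continues with a richer controller class.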

Committed Moving Horizon Estimation for Meal Detection

We introduce Committed Moving Horizon Estimation (CMHE), a model-based technique for detecting and estimating unknown random disturbances in control systems. We investigate CMHE as a meal detection and estimation method for the treatment of type 1 diabetes, where we are interested in automatically detecting the occurrence and estimating the amount of carbohydrate (CHO) intake from glucose sensor data. Meal detection and estimation are crucial in closed-loop insulin control, as they can eliminate the need for manual meal announcements by the patient.

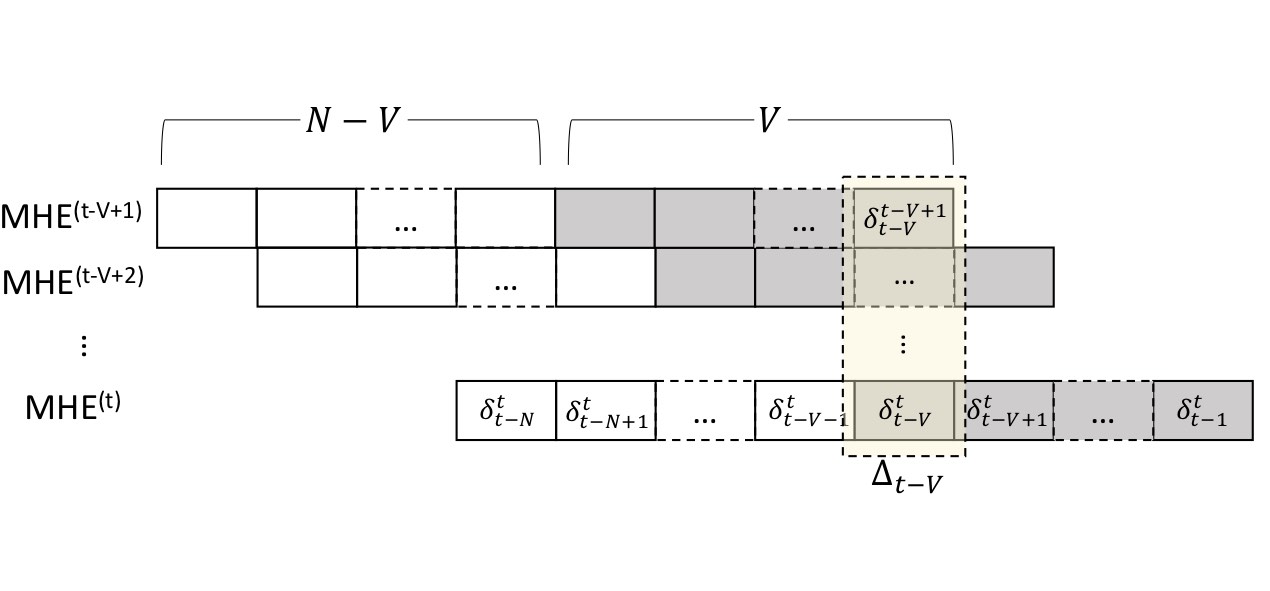

CMHE extends Moving Horizon Estimation (MHE), which, on its own, is not well-suited for disturbance estimation and meal detection. Indeed, accurate disturbance estimation needs both look-ahead (awareness of the disturbance effect on future system outputs) and history (past outputs to discriminate the start of the disturbance). For this purpose, CMHE aggregates the meal disturbances estimated by multiple MHE instances to balance future and past information at decision time, thus providing timely detection and accurate estimation. Applied to the detection of random meals from glucose sensor data, our method achieves an 88.5% overall detection rate and a 100% detection rate for the main meals (i.e., excluding snacks).

Attacking ECG biometrics

With the increasing popularity of mobile and wearable devices, biometric recognition has become ubiquitous. Unlike passwords, which rely on the user knowing something, biometrics make use of either distinctive physiological properties or behavior.

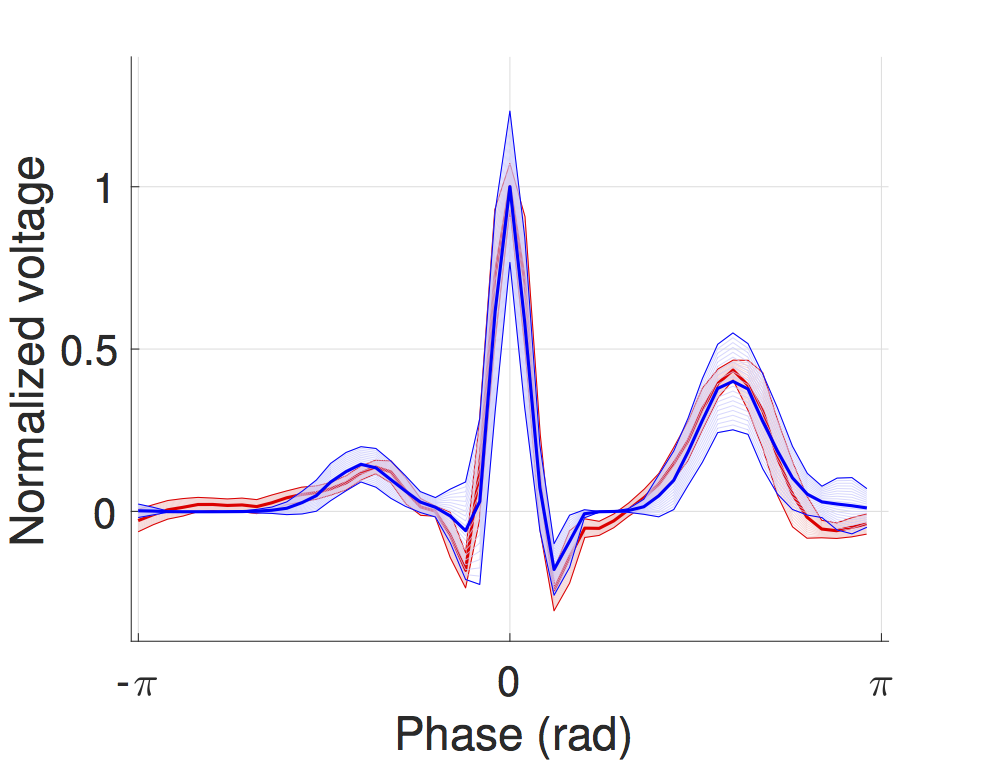

In this work, we study a systematic attack against ECG biometrics, i.e., biometrics that leverage the electrocardiogram signal of the wearer, and evaluate the attack on a commercial device, the Nymi Band. We instantiated the attack using different techniques: a hardware-based waveform generator, a computer sound card, and the playback of ECG signals encoded as .wav files using an off-the-shelf audio player.

We collected training data from 40+ participants using a variety of ECG monitors, including a medical monitor, a smartphone-based mobile monitor and the Nymi Band. Then, we enrolled users into the Nymi Band and tested whether data from any of the above ECG sources can be used for a signal injection attack. Using data collected directly on the Nymi Band, we achieve a success rate of 81%, which decreases to 43% with data from other devices.

To improve the success rate with data from other devices, available to the attacker through, e.g., medical records of the victim, we devise a method for learning optimal mappings between devices (using training data), i.e., functions that transform the ECG signal of one device as if it were produced by another device. Thanks to this method, we achieved a 62% success rate with data not produced by the Nymi Band.
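To give a flavour of what learning a mapping between devices could look like, the sketch below fits the simplest possible one: an affine map (gain and offset) estimated by least squares from time-aligned samples of the same heartbeat recorded on two devices. The affine form, the assumption of perfect time alignment, and the function names are illustrative choices for this example; the family of mappings used in the actual work is not specified here and is likely richer.

```python
def fit_affine_mapping(source, target):
    """Least-squares gain and offset such that target ~= gain * source + offset."""
    if len(source) != len(target) or len(source) < 2:
        raise ValueError("need two equally long signals with at least 2 samples")
    n = len(source)
    mean_s = sum(source) / n
    mean_t = sum(target) / n
    var_s = sum((s - mean_s) ** 2 for s in source)
    if var_s == 0.0:
        raise ValueError("source signal is constant")
    cov = sum((s - mean_s) * (t - mean_t) for s, t in zip(source, target))
    gain = cov / var_s
    return gain, mean_t - gain * mean_s


def apply_mapping(signal, gain, offset):
    """Transform a source-device signal into the target device's scale."""
    return [gain * s + offset for s in signal]


if __name__ == "__main__":
    # Synthetic, made-up samples: the target device reads 2x the amplitude plus a baseline of 1.
    src = [0.0, 1.0, 2.0, 3.0]
    tgt = [1.0, 3.0, 5.0, 7.0]
    gain, offset = fit_affine_mapping(src, tgt)
    print(gain, offset, apply_mapping([4.0], gain, offset))
```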

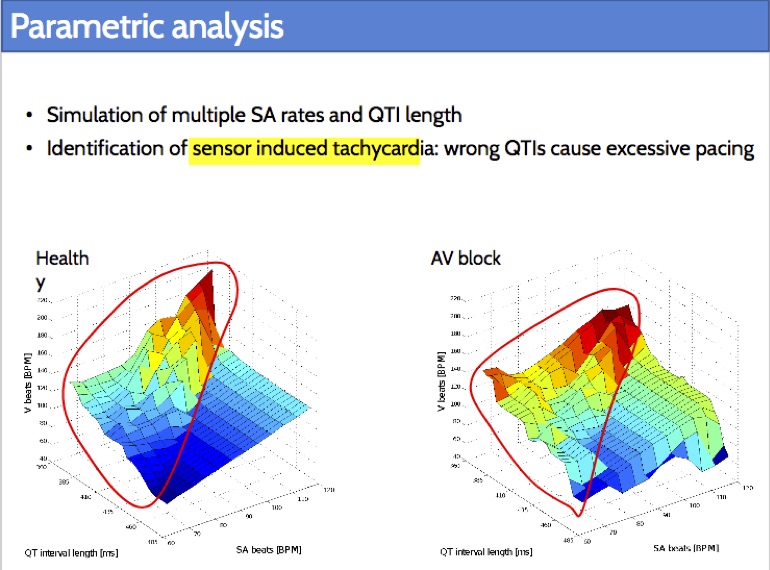

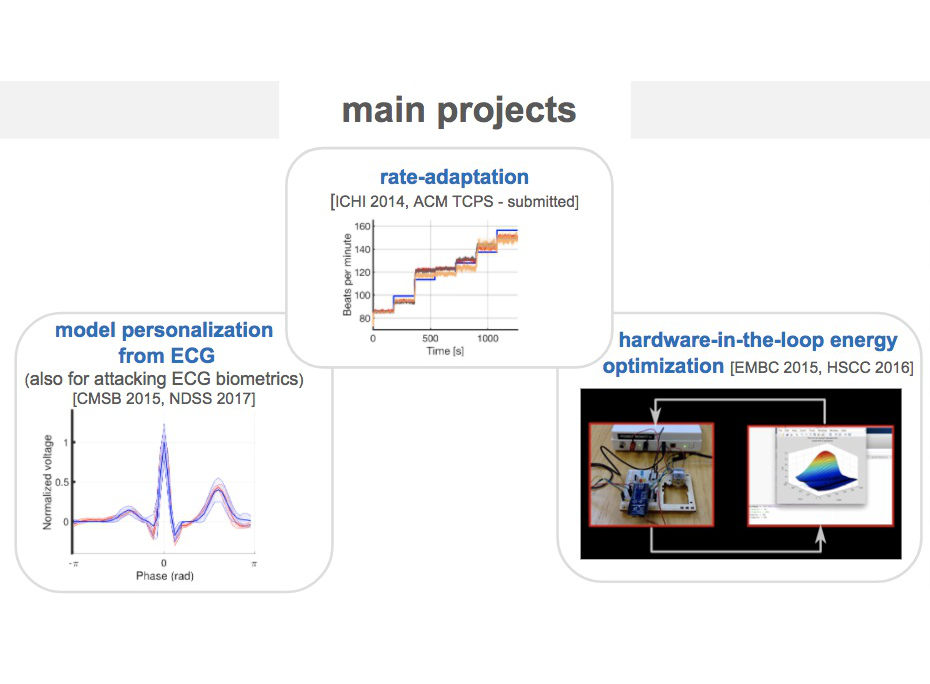

Closed-loop quantitative verification of rate-adaptive pacemakers

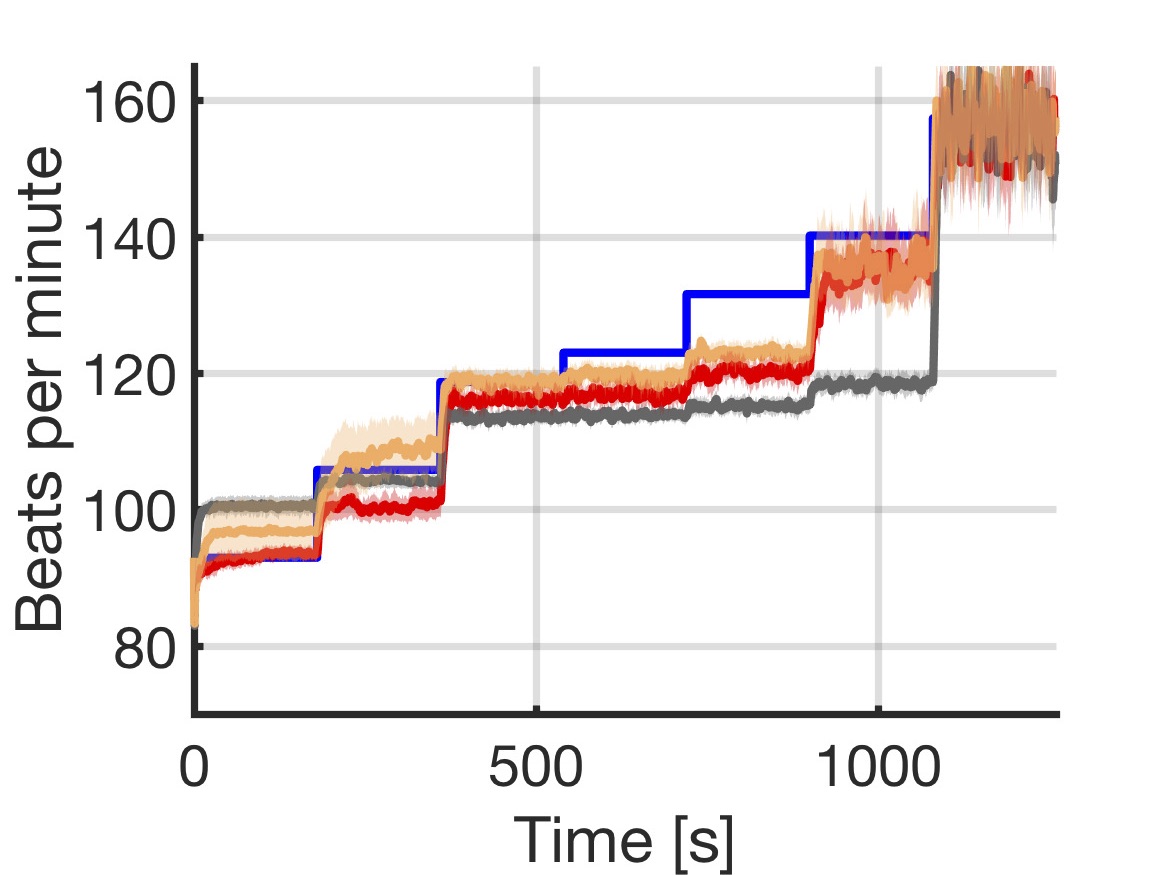

Rate-adaptive (RA) pacemakers are able to adjust the pacing rate depending on the patient's level of activity, detected by processing physiological signal data. RA pacemakers represent the only choice when the heart rate cannot naturally adapt to increasing demand (e.g., AV block). RA parameters depend on many patient-specific factors, and effective personalisation of such treatments can only be achieved through extensive exercise testing, which is normally intolerable for a cardiac patient.

We introduce a data-driven and model-based approach for the quantitative verification of RA pacemakers and formal analysis of personalised treatments. We developed a novel dual-sensor pacemaker model where the adaptive rate is computed by blending information from an accelerometer and a metabolic sensor based on the QT interval (a feature of the ECG). The approach builds on the HeartVerify tool to provide statistical model checking of the probabilistic heart-pacemaker models, and supports model personalization from ECG data (see heart model page). Closed-loop analysis is achieved through the online generation of synthetic, model-based QT intervals and acceleration signals.

We further extend the model personalization method to estimate parameters from a patient population, thus enabling safety verification of the device. We evaluate the approach on three subjects and a pool of virtual patients, providing rigorous, quantitative insights into the closed-loop behaviour of the device under different exercise levels and heart conditions.

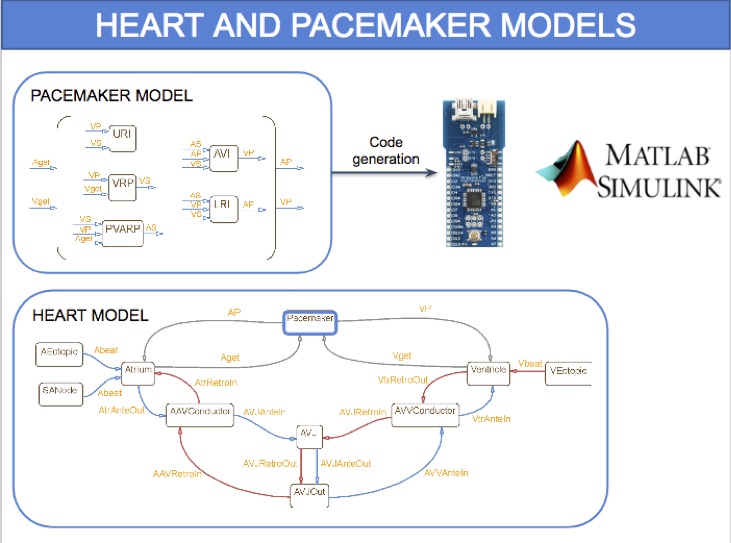

HeartVerify: Model-Based Quantitative Verification of Cardiac Pacemakers

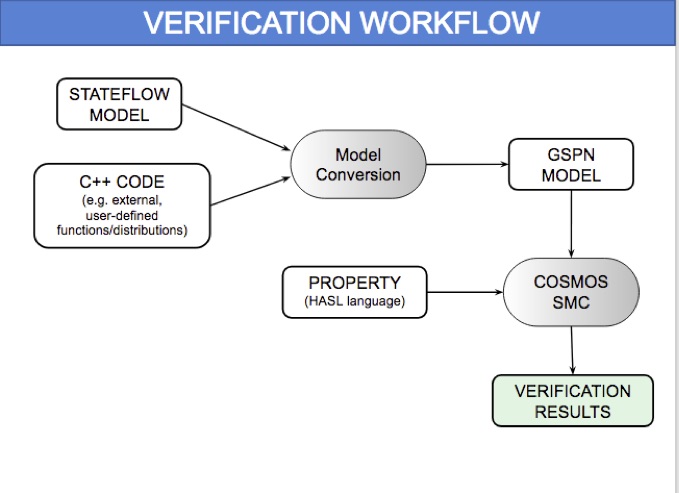

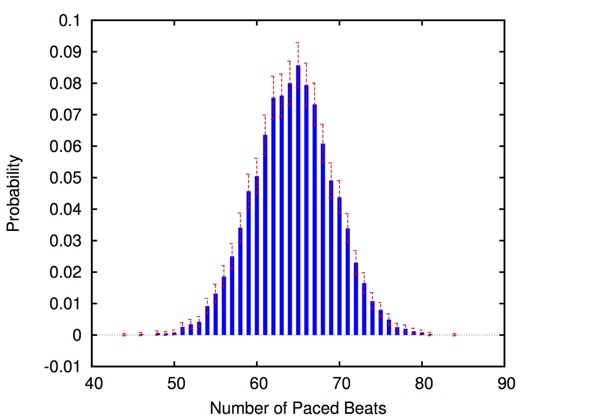

HeartVerify is a framework for the analysis and verification of pacemaker software and personalised heart models. Models are specified in MATLAB Stateflow and are analysed using the Cosmos tool for statistical model checking, thus enabling the analysis of complex nonlinear dynamics of the heart, where precise (numerical) verification methods typically fail.

The approach is modular in that it allows configuring and testing different heart and pacemaker models in a plug-and-play fashion, without changing their communication interface or the verification engine. It supports the verification of probabilistic properties, together with additional analyses such as simulation, generation of probability density plots (see figure) and parametric analyses. HeartVerify comes with different heart and pacemaker models, including methods for model personalization from ECG data and rate-adaptive pacing.

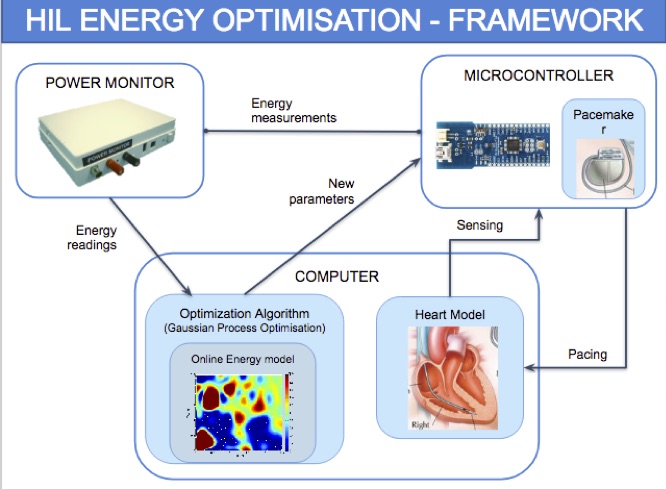

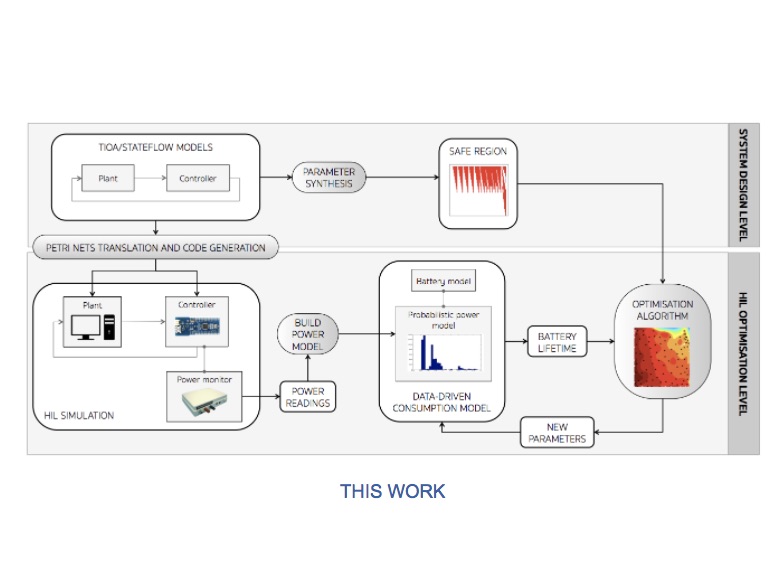

Hardware-in-the-loop energy optimization

Energy efficiency of cardiac pacemakers is crucial because it reduces the frequency of device re-implantations, thus improving the quality of life of cardiac patients.

To achieve effective energy optimisation, we take a hardware-in-the-loop (HIL) simulation approach: the pacemaker model is encoded into hardware and interacts with a computer simulation of the heart model. In addition, a power monitor is attached to the pacemaker to provide real-time power consumption measurements. The approach is model-based and supports generic control systems. Controller (e.g., pacemaker) and plant (e.g., heart) models are specified as networks of parameterised timed input/output automata (using MATLAB Stateflow) and translated into executable code.

We realise a fully automated optimisation workflow, where HIL simulation is used to build a probabilistic power consumption model from power measurement data. The obtained model is used by the optimisation algorithm to find optimal pacemaker parameters that, e.g., minimise consumption or maximise battery lifetime. We additionally employ parameter synthesis methods to restrict the search to safe parameters. We employ timed Petri nets as an intermediate representation of the executable specification, which facilitates efficient code generation and fast simulations.

Probabilistic timed modelling of cardiac dynamics and personalization from ECG

We developed a timed automata translation of the IDHP (Integrated Dual-chamber Heart and Pacer) model by Lian et al. Timed components realize the conduction delays between functional components of the heart, and action potential propagation between nodes is implemented through synchronisation between the involved components. In this way, the model can be easily extended with other accessory conduction pathways in order to reproduce specific heart conditions.

The IDHP model can also be parametrised from a patient's electrocardiogram (ECG) in order to reproduce the specific physiological characteristics of the individual. For this purpose, we extended the model to generate synthetic ECG signals that reflect the heart events occurring at simulation time. Starting from raw ECG recordings, our method automatically finds model parameters such that the synthetic signal best mimics the input ECG, by minimising the statistical distance between the two. The resulting parameters correspond to probabilistic delays, reflecting the variability of ECG features.

The IDHP heart model can be downloaded from the tool page, while personalization from ECG data is implemented in the HeartVerify tool.

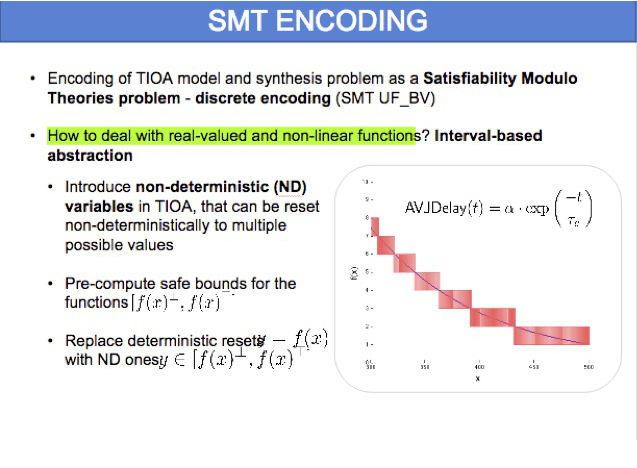

Parameter synthesis for pacemaker design optimization

Verification is useful in establishing key correctness properties of cardiac pacemakers, but has limitations, in that it is not clear how to redesign the model if it fails to satisfy a given property. Instead, parameter synthesis aims to automatically find optimal values of parameters to guarantee that a given property is satisfied.

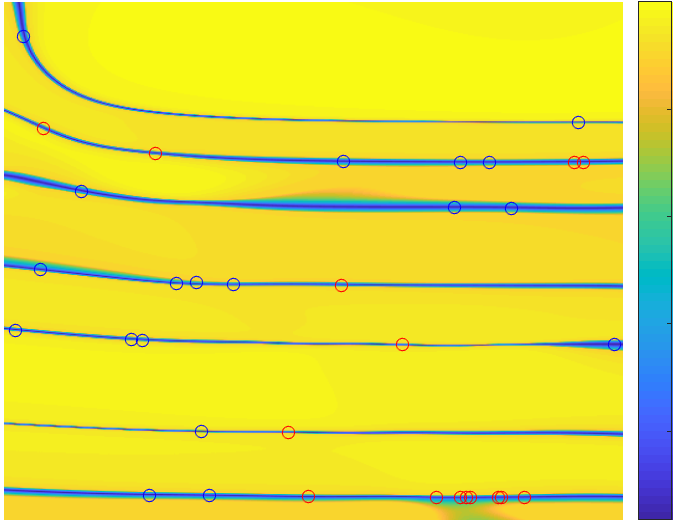

In this project, we develop methods to synthesize pacemaker parameters that are safe, robust and, at the same time, able to optimise a given quantitative requirement such as energy consumption or clinical indicators. We solve this problem by combining symbolic methods (SMT solving) for ruling out parameters that violate heart safety (red areas in the figure) with evolutionary strategies for optimising the quantitative requirement.
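The combination of a safety filter with an evolutionary search can be sketched as follows. This is a minimal (1+1) evolution strategy under stated assumptions: `is_safe` is a hypothetical stand-in for the SMT-based check (here just a fixed box of parameters), `energy` is a made-up cost, and the two parameters (`rate`, `amplitude`) are illustrative rather than actual pacemaker parameters.

```python
import random


def is_safe(params):
    """Hypothetical stand-in for the SMT safety check: a fixed box of safe parameters."""
    rate, amplitude = params
    return 50.0 <= rate <= 120.0 and 1.0 <= amplitude <= 5.0


def energy(params):
    """Made-up quantitative requirement to minimise."""
    rate, amplitude = params
    return rate * amplitude ** 2


def evolve(start, generations=200, sigma=2.0, seed=0):
    """(1+1) evolution strategy that only ever accepts safe, strictly better children."""
    if not is_safe(start):
        raise ValueError("start parameters must be safe")
    rng = random.Random(seed)
    best = start
    for _ in range(generations):
        # Mutate every parameter with Gaussian noise.
        child = tuple(p + rng.gauss(0.0, sigma) for p in best)
        # Unsafe children are discarded before their cost is even compared.
        if is_safe(child) and energy(child) < energy(best):
            best = child
    return best


if __name__ == "__main__":
    result = evolve((80.0, 3.0))
    print(result, energy(result))
```

Because unsafe children are never accepted, the returned parameters are safe by construction, while the cost can only decrease relative to the starting point.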